Mastering Measurement: Your Ultimate Guide to Absolute, Relative, and Full Scale (%FS) Error

Have you ever looked at the specification sheet for a pressure transmitter, a flow meter, or a temperature sensor and seen a line item like “Accuracy: ±0.5% FS”? It’s a common specification, but what does it really mean for the data you’re collecting? Does it mean every reading is within 0.5% of the true value? As it turns out, the answer is a bit more complex, and understanding this complexity is crucial for anyone involved in engineering, manufacturing, and scientific measurement.

Error is an unavoidable part of the physical world. No instrument is perfect. The key is to understand the nature of the error, quantify it, and make sure it’s within acceptable limits for your specific application. This guide will demystify the core concepts of measurement error. It starts with the foundational definitions and then expands into practical examples and crucial related topics, transforming you from someone who just reads the specs to someone who truly understands them.

What Is Measurement Error?

At its heart, measurement error is the difference between a measured quantity and its true, actual value. Think of it as the gap between the world as your instrument sees it and the world as it actually is.

Error = Measured Value – True Value.

The “True Value” is a theoretical concept. In practice, the absolute true value can never be known with perfect certainty. Instead, a conventional true value is used. This is a value provided by a measurement standard or reference instrument that is significantly more accurate (typically 4 to 10 times more accurate) than the device being tested. For instance, when calibrating a handheld pressure gauge, the “conventional true value” would be sourced from a high-precision, laboratory-grade pressure calibrator.

Understanding this simple equation is the first step, but it doesn’t tell the whole story. An error of 1 millimeter is insignificant when measuring the length of a 100-meter pipe, but it’s a catastrophic failure when machining a piston for an engine. To get the full picture, we need to express this error in more meaningful ways. This is where absolute, relative, and reference errors come into play.

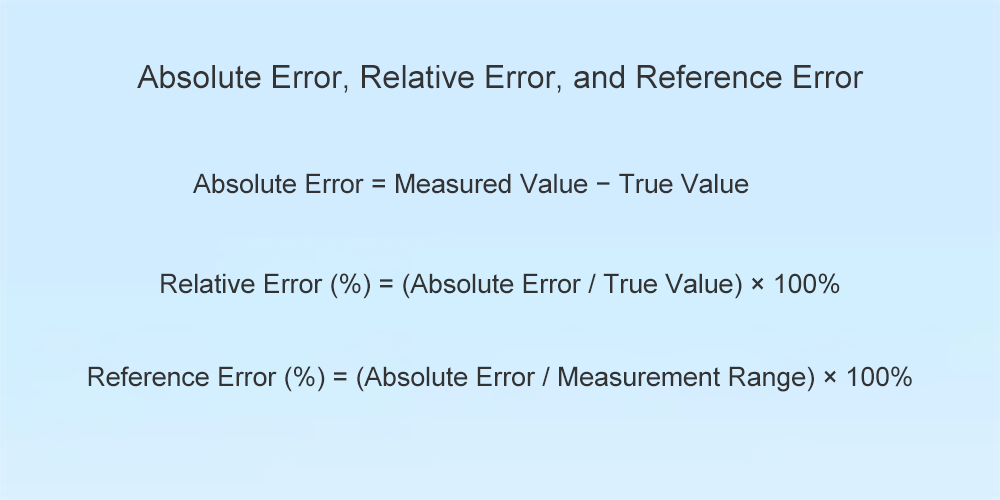

The Three Common Measurement Errors

Let’s break down the three primary ways to quantify and communicate measurement error.

1. Absolute Error: The Raw Deviation

Absolute error is the simplest and most direct form of error. It is the direct difference between the measurement and the true value, expressed in the units of the measurement itself.

Formula:

Absolute Error = Measured Value − True Value

Example:

You are measuring the flow in a pipe with a true flow rate of 50 m³/h, and your flow meter reads 50.5 m³/h, so the absolute error is 50.5 – 50 = +0.5 m³/h.

Now, imagine you are measuring a different process with a true flow of 500 m³/h, and your flow meter reads 500.5 m³/h. The absolute error is still +0.5 m³/h.
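As a minimal sketch, the two readings above can be reproduced in a few lines of Python. The function name `absolute_error` is chosen here for illustration and is not taken from any library.

```python
def absolute_error(measured: float, true_value: float) -> float:
    """Return the signed absolute error, in the same units as the inputs."""
    return measured - true_value


# The two flow readings from the example above, in m³/h.
low_flow_error = absolute_error(measured=50.5, true_value=50.0)
high_flow_error = absolute_error(measured=500.5, true_value=500.0)

print(f"Low flow:  {low_flow_error:+.1f} m³/h")
print(f"High flow: {high_flow_error:+.1f} m³/h")
```

Both lines print the same deviation, which is exactly why absolute error alone says little about how significant the error is.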

When is it useful? Absolute error is essential during calibration and testing. A calibration certificate will often list the absolute deviations at various test points. However, as the example shows, it lacks context. An absolute error of +0.5 m³/h feels much more significant for the smaller flow rate than for the larger one. To understand that significance, we need relative error.

2. Relative Error: The Error in Context

Relative error provides the context that absolute error lacks. It expresses the error as a fraction or percentage of the actual value being measured. This tells you how large the error is in relation to the magnitude of the measurement.

Formula:

Relative Error (%) = (Absolute Error / True Value) × 100%

Example:

Let’s revisit our example:

For the 50 m³/h flow: Relative Error = (0.5 m³/h / 50 m³/h) × 100% = 1%

For the 500 m³/h flow: Relative Error = (0.5 m³/h / 500 m³/h) × 100% = 0.1%

Suddenly, the difference is much clearer. Although the absolute error was identical in both scenarios, the relative error shows that the measurement was ten times less accurate for the lower flow rate.
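The same comparison can be sketched in Python. The helper name `relative_error_percent` is an assumption made for this illustration; the guard against a zero true value is added because the formula divides by it.

```python
def relative_error_percent(measured: float, true_value: float) -> float:
    """Return the signed relative error as a percentage of the true value."""
    if true_value == 0:
        raise ValueError("relative error is undefined when the true value is zero")
    return (measured - true_value) / true_value * 100.0


# Same absolute error (+0.5 m³/h) at two different flow rates.
low_flow = relative_error_percent(measured=50.5, true_value=50.0)
high_flow = relative_error_percent(measured=500.5, true_value=500.0)

print(f"Low flow:  {low_flow:.2f}%")
print(f"High flow: {high_flow:.2f}%")
print(f"Ratio:     {low_flow / high_flow:.0f}x")
```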

Why does this matter? Relative error is a much better indicator of an instrument’s performance at a specific operating point. It helps answer the question, “How good is this measurement right now?” However, instrument manufacturers can’t list a relative error for every possible value you might measure. They need a single, reliable metric to guarantee the performance of their device across its entire operational capability. That’s the job of reference error.

3. Reference Error (%FS): The Industry Standard

This is the specification you see most often on datasheets: accuracy expressed as a percentage of Full Scale (%FS), also known as reference error or spanning error. Instead of comparing the absolute error to the current measured value, it compares it to the total span (or range) of the instrument.

Formula:

Reference Error (%) = (Absolute Error / Measurement Range) × 100%

The Measurement Range (or Span) is the difference between the maximum and minimum values the instrument is designed to measure.
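A short sketch of the formula follows. The function name and the 0 to 1000 m³/h flow meter range are hypothetical values picked for illustration; only the +0.5 m³/h absolute error comes from the earlier flow example.

```python
def reference_error_percent(absolute_error: float,
                            range_min: float,
                            range_max: float) -> float:
    """Return the error as a percentage of the instrument's span."""
    span = range_max - range_min
    if span <= 0:
        raise ValueError("range_max must be greater than range_min")
    return absolute_error / span * 100.0


# Hypothetical flow meter ranged 0 to 1000 m³/h, reading +0.5 m³/h high.
error_fs = reference_error_percent(absolute_error=0.5,
                                   range_min=0.0,
                                   range_max=1000.0)
print(f"Reference error: {error_fs:.2f}% FS")
```

Note that the result depends only on the span, not on the value being measured at that moment, which is what makes it usable as a single datasheet figure.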

The Crucial Example: Understanding %FS

Let’s imagine you buy a pressure transmitter with the following specifications:

- Range: 0 to 200 bar
- Accuracy: ±0.5% FS

Step 1: Calculate the Maximum Permissible Absolute Error.

First, we find the absolute error that this percentage corresponds to: Max absolute error = ±0.5% × (200 bar – 0 bar) = ±0.005 × 200 bar = ±1 bar.

This is the most important calculation, which tells us that no matter what pressure we are measuring, the reading from this instrument is guaranteed to be within ±1 bar of the true value.

Step 2: See How This Affects Relative Accuracy.

Now, let’s see what this ±1 bar error means at different points in the range:

- Measuring a pressure of 100 bar (50% of the range): The reading could be anywhere from 99 to 101 bar. The relative error at this point is (1 bar / 100 bar) × 100% = ±1%.
- Measuring a pressure of 20 bar (10% of the range): The reading could be anywhere from 19 to 21 bar. The relative error at this point is (1 bar / 20 bar) × 100% = ±5%.
- Measuring a pressure of 200 bar (100% of the range): The reading could be anywhere from 199 to 201 bar. The relative error at this point is (1 bar / 200 bar) × 100% = ±0.5%.

This reveals a critical principle of instrumentation: when accuracy is specified as %FS, an instrument’s relative accuracy is best at the top of its range and worst at the bottom.
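The two steps above can be sketched in Python for the 0 to 200 bar transmitter. The function names are illustrative assumptions, not part of any instrument vendor's tooling.

```python
def max_absolute_error(accuracy_percent_fs: float,
                       range_min: float,
                       range_max: float) -> float:
    """Step 1: convert a %FS accuracy rating into an absolute error limit."""
    return accuracy_percent_fs / 100.0 * (range_max - range_min)


def worst_case_relative_error_percent(error_limit: float,
                                      true_value: float) -> float:
    """Step 2: express the fixed error limit as a percentage of the reading."""
    if true_value == 0:
        raise ValueError("relative error is undefined at a true value of zero")
    return error_limit / abs(true_value) * 100.0


# Transmitter from the example: 0 to 200 bar, ±0.5% FS.
limit_bar = max_absolute_error(0.5, range_min=0.0, range_max=200.0)

for pressure in (20.0, 100.0, 200.0):
    relative = worst_case_relative_error_percent(limit_bar, pressure)
    print(f"{pressure:5.0f} bar: reading within "
          f"{pressure - limit_bar:.0f} to {pressure + limit_bar:.0f} bar, "
          f"±{relative:.1f}% of reading")
```

The error limit stays fixed at 1 bar while the percentage of reading grows as the pressure drops, which is the pattern the list above describes.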

Practical Takeaway: How to Choose the Right Instrument?

The relationship between %FS and relative error has a profound impact on instrument selection. The smaller the reference error, the higher the overall accuracy of the instrument. However, you can also improve your measurement accuracy simply by choosing the correct range for your application.

The golden rule of measurement sizing is to select an instrument where your typical operating values fall in the upper two-thirds (ideally, the upper half) of its full-scale range. Let’s walk through an example:

Imagine your process normally operates at a pressure of 70 bar, but can have peaks up to 90 bar. You are considering two transmitters, both with ±0.5% FS accuracy:

- Transmitter A: Range 0-500 bar
- Transmitter B: Range 0-100 bar

Let’s calculate the potential error for your normal operating point of 70 bar:

Transmitter A (0-500 bar):

- Max absolute error = 0.5% × 500 bar = ±2.5 bar.
- At 70 bar, your reading could be off by as much as 2.5 bar. Your worst-case relative error is (2.5 / 70) × 100% ≈ ±3.57%. This is a significant error!

Transmitter B (0-100 bar):

- Max absolute error = 0.5% × 100 bar = ±0.5 bar.
- At 70 bar, your reading could be off by only 0.5 bar. Your worst-case relative error is (0.5 / 70) × 100% ≈ ±0.71%.

By choosing the instrument with the appropriately “compressed” range for your application, you improved your real-world measurement accuracy by a factor of five, even though both instruments had the same “%FS” accuracy rating on their datasheets.
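One way to sketch this selection logic in Python is shown below. The `Transmitter` class and its method names are hypothetical, written only to mirror the comparison above; the check that the range covers the 90 bar peak is an added assumption about what a sensible selection would require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transmitter:
    name: str
    range_min: float            # bar
    range_max: float            # bar
    accuracy_percent_fs: float  # e.g. 0.5 means ±0.5% FS

    def max_absolute_error(self) -> float:
        """Fixed error limit implied by the %FS rating, in bar."""
        return self.accuracy_percent_fs / 100.0 * (self.range_max - self.range_min)

    def covers(self, value: float) -> bool:
        """True if the value lies inside the measuring range."""
        return self.range_min <= value <= self.range_max

    def worst_case_relative_error_percent(self, value: float) -> float:
        """Error limit expressed as a percentage of the given operating value."""
        return self.max_absolute_error() / value * 100.0


candidates = [
    Transmitter("A", 0.0, 500.0, 0.5),
    Transmitter("B", 0.0, 100.0, 0.5),
]
normal_pressure = 70.0  # bar
peak_pressure = 90.0    # bar

# Keep only instruments whose range still covers the process peak.
usable = [t for t in candidates if t.covers(peak_pressure)]
best = min(usable,
           key=lambda t: t.worst_case_relative_error_percent(normal_pressure))

for t in usable:
    print(f"Transmitter {t.name}: ±{t.max_absolute_error():.1f} bar, "
          f"±{t.worst_case_relative_error_percent(normal_pressure):.2f}% at "
          f"{normal_pressure:.0f} bar")
print(f"Best fit: Transmitter {best.name}")
```

A real selection would also weigh overpressure limits, cost, and environmental ratings, none of which are modeled here.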

Accuracy vs. Precision: A Critical Distinction

To fully master measurement, one more concept is essential: the difference between accuracy and precision. People often use these terms interchangeably, but in science and engineering, they mean very different things.

Accuracy is how close a measurement is to the true value. It relates to absolute and relative error. An accurate instrument, on average, gives the correct reading.

Precision is how close multiple measurements of the same thing are to each other. It refers to the repeatability or consistency of a measurement. A precise instrument gives you nearly the same reading every single time, but that reading isn’t necessarily the correct one.

Here’s the target analogy:

- Accurate and Precise: All your shots are tightly clustered in the center of the bullseye. This is the ideal.
- Precise but Inaccurate: All your shots are tightly clustered together, but they are in the top-left corner of the target, far from the bullseye. This indicates a systematic error, such as a misaligned scope on a rifle or a poorly calibrated sensor. The instrument is repeatable but consistently wrong.
- Accurate but Imprecise: Your shots are scattered all over the target, but their average position is the center of the bullseye. This indicates a random error, where each measurement fluctuates unpredictably.
- Neither Accurate nor Precise: The shots are scattered randomly all over the target, with no consistency.

An instrument with a 0.5% FS specification is claiming its accuracy, while the precision (or repeatability) is often listed as a separate line item on the datasheet and is usually a smaller (better) number than its accuracy.
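The distinction can be illustrated numerically with a rough sketch. The readings below are made-up values for a 100 bar reference pressure, and using the sample standard deviation as a stand-in for repeatability is an assumption made here; datasheets may define repeatability differently.

```python
import statistics


def bias(readings: list[float], true_value: float) -> float:
    """Accuracy indicator: how far the average reading is from the true value."""
    return statistics.mean(readings) - true_value


def spread(readings: list[float]) -> float:
    """Precision indicator: sample standard deviation of repeated readings."""
    return statistics.stdev(readings)


true_pressure = 100.0  # bar, hypothetical reference value

# Hypothetical repeated readings from two instruments.
precise_but_inaccurate = [101.9, 102.0, 102.1, 102.0, 102.0]
accurate_but_imprecise = [98.5, 101.5, 99.0, 101.0, 100.0]

for label, readings in [
    ("Precise but inaccurate", precise_but_inaccurate),
    ("Accurate but imprecise", accurate_but_imprecise),
]:
    print(f"{label}: bias = {bias(readings, true_pressure):+.2f} bar, "
          f"spread = {spread(readings):.2f} bar")
```

The first instrument shows a large bias with a small spread (a systematic error), while the second shows almost no bias but a much larger spread (a random error).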

Conclusion

Understanding the nuances of error is what separates a good engineer from a great one.

In summary, mastering measurement error requires moving from basic concepts to practical application. Absolute error provides the raw deviation, relative error places it in the context of the current measurement, and reference error (%FS) offers a standardized guarantee of an instrument’s maximum error across its entire range. The key takeaway is that an instrument’s specified accuracy and its real-world performance are not the same.

By understanding how a fixed %FS error impacts relative accuracy across the scale, engineers and technicians can make informed decisions. Selecting an instrument with the appropriate range for the application is just as crucial as its accuracy rating, ensuring that the collected data is a reliable reflection of reality.

The next time you review a datasheet and see an accuracy rating, you’ll know exactly what it means: you can calculate the maximum potential error and see how that error will affect your process at different operating points.

Post time: May-20-2025